Chefy

Your cooking assistant - an Alexa skill

VOICE UI DESIGN

Project info

The project took 3 months to complete (UX and UI design).

The team on this project included:

Adva Levin (Mentor)

Methods

Competitor Analysis

User Stories

Personas

Placeonas

Tools used

Amazon Echo

ASK (Alexa Skills Kit)

Adobe XD

Voiceflow

Lucidchart

Sketch

Marvel App

The challenge

When we cook, our hands are busy. If we want to follow an online recipe or a recipe from a book, we can’t easily scroll or flip through it without getting it dirty. To simplify this experience, I designed an Alexa Skill called Chefy, which helps people cook and follow recipes using their voice.

My approach

I looked at several Alexa skills that compete with Chefy. I paid attention to their invocation names (how the interaction gets started), intents (the ‘instructions’ the skill is designed to act on), prompts (the questions it asks to keep the conversation going), and how they handle users’ responses.

To keep a clear direction in mind, I also defined the following business requirements:

Business requirements

1. Allow users to access recipes via voice.

2. Increase the percentage of returning users.

Research

Target audience. This Alexa Skill has been designed for busy individuals aged 18–35 (all genders) who are familiar with using voice-controlled devices. They’re interested in quick meal preparation and want recipe inspiration. They include working parents (primarily mums) who, after coming home from work, still need to prepare a meal for their hungry child or family, as well as students who need a quick snack between study sessions.

The choice of audience was based on my observations of TV cooking ads, which primarily target young women who shop for and prepare meals. Also, having been a student myself and having interacted with other students, I concluded that this demographic can also benefit from help in the kitchen: students need to eat, have limited time to make meals and are on a tight budget, so my Alexa Skill could be a great kitchen companion for them too.

User persona

System persona

Interviews

I also conducted a few interviews with my potential audience. In my screening, I made sure to work only with participants who cook now (or have cooked in the past) and who use voice-assisted technology (e.g. Siri or Google on their phone, Alexa or Google Home at home). I divided each interview into three sections:

Cooking. Here, I asked questions related to the general act of cooking: whether they cook, if they like/dislike doing it, how often they do it, and where they get inspiration for their meals.

Recipes. In this part of the interview I wanted to learn whether my interviewees had ever used recipes and, if so, in what form (online, cookbooks, magazines, etc.) and how they usually follow them. I also tried to learn whether they use any voice-enabled technology to support their cooking (e.g. setting a countdown).

Environment. Since cooking usually takes place at home, in the kitchen, I wanted to learn who my participants are with when they cook, and whether their environment is noisy or quiet. This would help me understand the environment my Alexa Skill would be used in.

Interview findings

I love working with participants; the wealth of information I get is always astounding. In this case, they helped me understand what my potential users care about when cooking. After the interviews, a few patterns emerged that helped me narrow down which features to implement in my skill first:


Feature #1. Finding recipes by the main ingredient

Two of my interviewees often don’t know what to cook, so they decide based on what they have in the fridge and then Google recipe suggestions for that one ingredient.

Feature #2. Ability to favourite recipes

Some interviewees said that when they find a new recipe they like, they usually keep it and cook it again. This saves them time coming up with new meals throughout the week.

Feature #3. Ability to find an ingredient substitute

Three out of five people I interviewed said that if they were missing an ingredient, they’d use a substitute, using Google to find one they have in the fridge. The remaining two said they’d either pause their cooking and go to a shop to buy it, or stop cooking altogether (these were less experienced cooks, who generally cook 2–3 times a week).

Feature #4. Ability to specify a party number and meal type

Enable users to specify the number of people they’re cooking for.

I also decided to let users get recipes for breakfast, lunch, dinner and snacks, whilst keeping the recipes very simple. Three of my participants said they find some recipes frustrating because they are either long (especially when used with a voice-assisted device) and hard to follow, or inaccurate (one participant realised near the end of a recipe that the lamb had to go in the oven “for a very long time”, with no cooking time given).

Voice user flow

To understand how users would use Chefy whilst cooking, I created a user flow. It maps out how the intents in the skill relate to one another, what the system can do, and how it responds to various inputs. The flow is designed to give a quick overview of what a user can do and how those actions relate to each other. To learn what the user and system would actually say, you can view the corresponding script on this page.


Usability testing

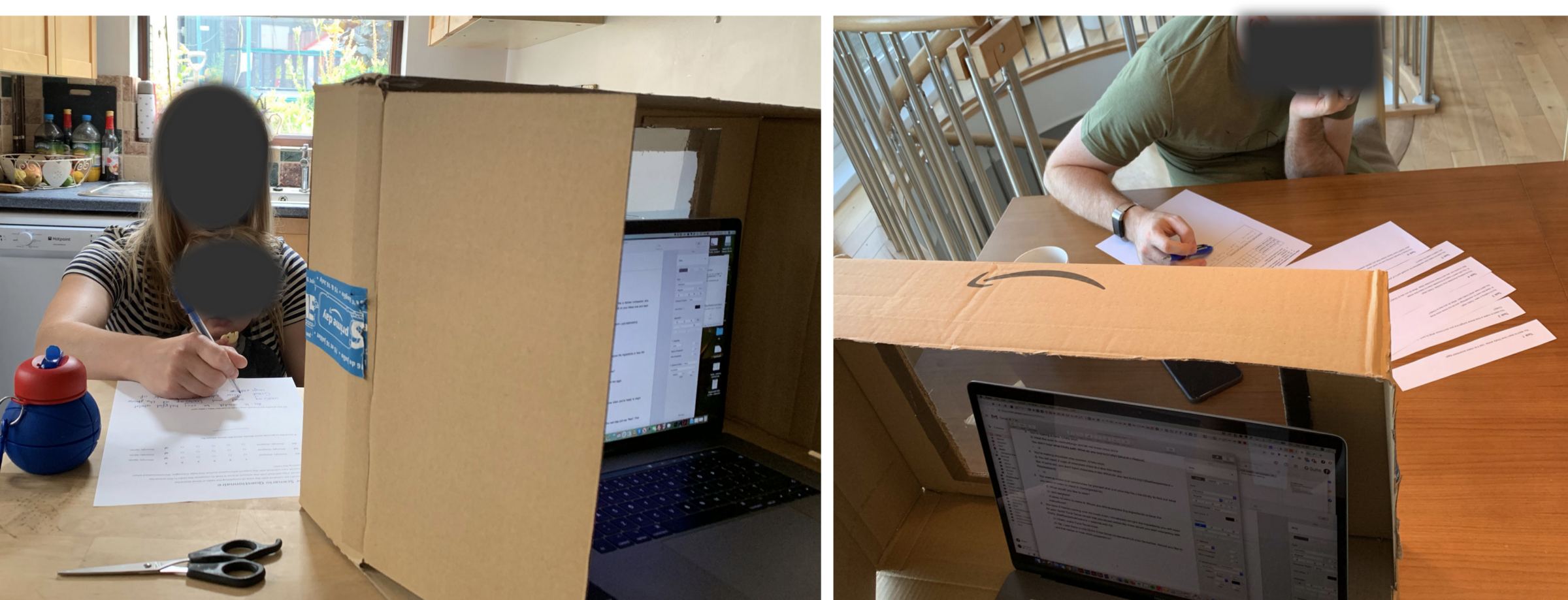

For both on-site and off-site usability testing, I used a wizard (downloaded from this GitHub page) designed for running Wizard of Oz tests of voice interfaces.

On-site testing

Location: in the participant’s kitchen.

Equipment: cardboard, black material, plastic sheet, one-way mirror glass film, double-sided tape, scissors, iPhone, tripod.

Conducting the test: I built a mockup of a wall that acted as a divider between me and the participants. Participants were given scenario tasks so they could familiarise themselves with what I needed them to do. Each time they asked Chefy for something, the wizard responded. My phone, mounted on a tripod, recorded the participants’ interactions with the system.

To build the wall, I cut a hole in a large Amazon box, attached reflective window film to a plastic sheet, and placed the sheet over the hole. And done! :)

Off-site testing

Location: remote, via appear.in and Facebook Messenger.

Conducting the test: Since Alexa users can’t see Alexa when interacting with her, my participants could not see me during testing either. To analyse how participants behaved with the system, I recorded the screen.

Testing and Tasks

This usability testing was conducted both on-site (with 3 participants) at the participants’ homes, and off-site (with 2 participants) via appear.in (now whereby.com) and the Facebook Messenger app.

On-site usability testing (in participants’ kitchens)

The following scenario was read to the users before the usability testing started:

“A friend of yours recommended an Alexa Skill called ‘Chefy’. This skill is like a kitchen companion who suggests tasty meals as well as helps you make them. You’ve enabled this skill on your Alexa now and want to try it out in your kitchen.”

You want to learn how Chefy works. Use it to make scrambled eggs [No Intent = exploration].

You want to make a nice dinner tonight but you don’t know what to cook. How would you use Chefy?

You’re making a cake. Chefy says:

S: Heat the oven to <something> and let me know once done.

You didn’t hear what Chefy said. What do you say to Chefy? [Global = Repeat]

You’re making chocolate chip cookies. Chefy says:

S: You will need 2 cups of chocolate chips to make this recipe.

But, it turns out, you don’t have chocolate chips. What do you say to Chefy? [GetRecipeIntent = Replacement]

You want to make club sandwiches for yourself and 3 of your friends. Use Chefy to find out what you need in order to make them. [GetIngredients]

You have 2 friends coming over for lunch soon. You already bought the ingredients you will need for your Quick Tuna Tacos recipe that you saved yesterday. How would you start interacting with Chefy? [GetRecipeInstructions = cooking activity]

Results

To organise my recordings, I used affinity mapping and a Rainbow Spreadsheet. I then used the Severity Ratings Scale to rate the usability problems I found and grouped my findings into four categories:

Catastrophe: imperative to fix

Major problem: important to fix

Minor problem: important to fix but low priority

Cosmetic: if time allows, it can be fixed

Proposed solutions

1. Improvement to the prompts’ design

The prompts should be less vague and designed to elicit a limited number of answers. The options should be presented clearly, so users can tell it is an either/or question (and not a yes/no question).

2. Improvement to the instructions on a conversation flow

Clarify how the users of this skill should request information from Chefy whilst using it.

3. More responses should be recognised

Add more response variations to the skill, e.g. the ReplacementIntent should also recognise: “What (else) can I use?”, “What do I need?”, “I don’t have any” and “What can I use instead?”.
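A minimal sketch of how these variations could be captured as sample utterances, assuming the skill is built with the Alexa Skills Kit: the Python below builds a fragment of an interaction model (the JSON shape ASK uses, with `invocationName`, `intents` and `samples`) and prints it. The intent name ReplacementIntent comes from this study; the invocation name "chefy" and the helper `build_interaction_model` are hypothetical. A deployable model would also declare built-in intents such as AMAZON.HelpIntent and AMAZON.StopIntent.

```python
import json

# Phrases participants used when they were missing an ingredient.
# "What (else) can I use?" becomes two separate samples,
# one with and one without "else".
REPLACEMENT_SAMPLES = [
    "what can I use",
    "what else can I use",
    "what do I need",
    "I don't have any",
    "what can I use instead",
]


def build_interaction_model(invocation_name, samples):
    """Return a minimal interaction-model fragment (hypothetical helper)."""
    return {
        "interactionModel": {
            "languageModel": {
                "invocationName": invocation_name,
                "intents": [
                    # Every listed phrase maps to the same replacement intent.
                    {"name": "ReplacementIntent", "slots": [], "samples": samples},
                ],
            }
        }
    }


model = build_interaction_model("chefy", REPLACEMENT_SAMPLES)
print(json.dumps(model, indent=2))
```

Listing each phrasing as its own sample keeps the model easy to extend as later test sessions surface new variations.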

4. Improved recovery from users’ errors

Chefy should repeat back what she heard so the user can confirm or correct it, and ask a clear follow-up question when she did not understand, e.g. “Sorry, how many people are we cooking for?”
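To illustrate this recovery behaviour, here is a minimal Python sketch of how Chefy might reply after asking for the number of people. It assumes the recogniser hands back the spoken number as a string of digits (or None when nothing usable was heard); the function name `party_size_response` and the upper bound of 20 people are hypothetical choices for this example, not part of the tested prototype.

```python
from typing import Optional

MAX_PARTY_SIZE = 20  # hypothetical upper bound for a plausible answer


def party_size_response(heard: Optional[str]) -> str:
    """Build Chefy's reply to the party-size question (hypothetical helper).

    `heard` is the value the recogniser returned for the number of people,
    or None if nothing usable was captured.
    """
    if heard is None or not heard.strip().isdigit():
        # Nothing usable: apologise and re-ask the same question directly.
        return "Sorry, how many people are we cooking for?"
    count = int(heard)
    if count < 1 or count > MAX_PARTY_SIZE:
        # Implausible value: echo it back so the user can spot the mishearing.
        return f"I heard {count} people. Sorry, how many people are we cooking for?"
    # Plausible value: echo it back so the user can confirm or correct it.
    noun = "person" if count == 1 else "people"
    return f"Got it, cooking for {count} {noun}. Is that right?"
```

Re-asking the specific question, rather than giving a generic “I didn’t understand”, tells users exactly what Chefy still needs from them.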

5. Improvement to the conversation flow

If the system says, e.g. “Would you like to continue cooking or hear a replacement?”, Chefy should accept “What can I use?” as a response. Alternatively, Chefy should give users better guidance on how to hold a conversation with her.

6. Improvement to problem solving

Make Chefy volunteer solutions to a problem, e.g. tell users they can cook without the missing ingredient, or suggest a replacement, while mentioning that in either case the meal may taste different.

7. Improvement to voice interactions

Improve Chefy’s voice quality, speed and intonation. Make it easy to follow and pleasing to the ear.

Usability testing - follow up

After I iterate on my current experience, these are the changes I’d introduce to my next Chefy usability test:

Provide more context on each task I ask my participants to complete. That way, they’d know how they should be thinking about their interaction with the system.

Base the tasks on recipes participants are not familiar with. That way, they can’t skip steps, because they won’t already know which ingredients the meal needs (unlike “scrambled eggs” in Task 1).

Run more pilot tests before the actual usability testing, to ensure the tasks are clear and participants know exactly what is expected of them.

Create a better Wizard of Oz experience, for instance with Voiceflow.

Final thoughts

Designing conversational UI is not easy. As in real conversation, the margin of error is very narrow: if we ask another person a simple question, we expect to be understood and to get an answer. If we ask them the same question again, we expect the same answer, perhaps phrased with different words and sentence structure. Most importantly, we expect to be understood. Since bots and voice assistants are not people, yet speak a human-like language, we must accommodate the limitations of these technologies.

Michael Beebe, a former CEO of Mayfield Robotics, said:

“When something speaks back to you in fluent natural language, you expect at least a child’s level of intelligence… So setting that expectation right keeps it more understandable.”